Image resize and quality comparison

26 July 2024 | 1:20 pm

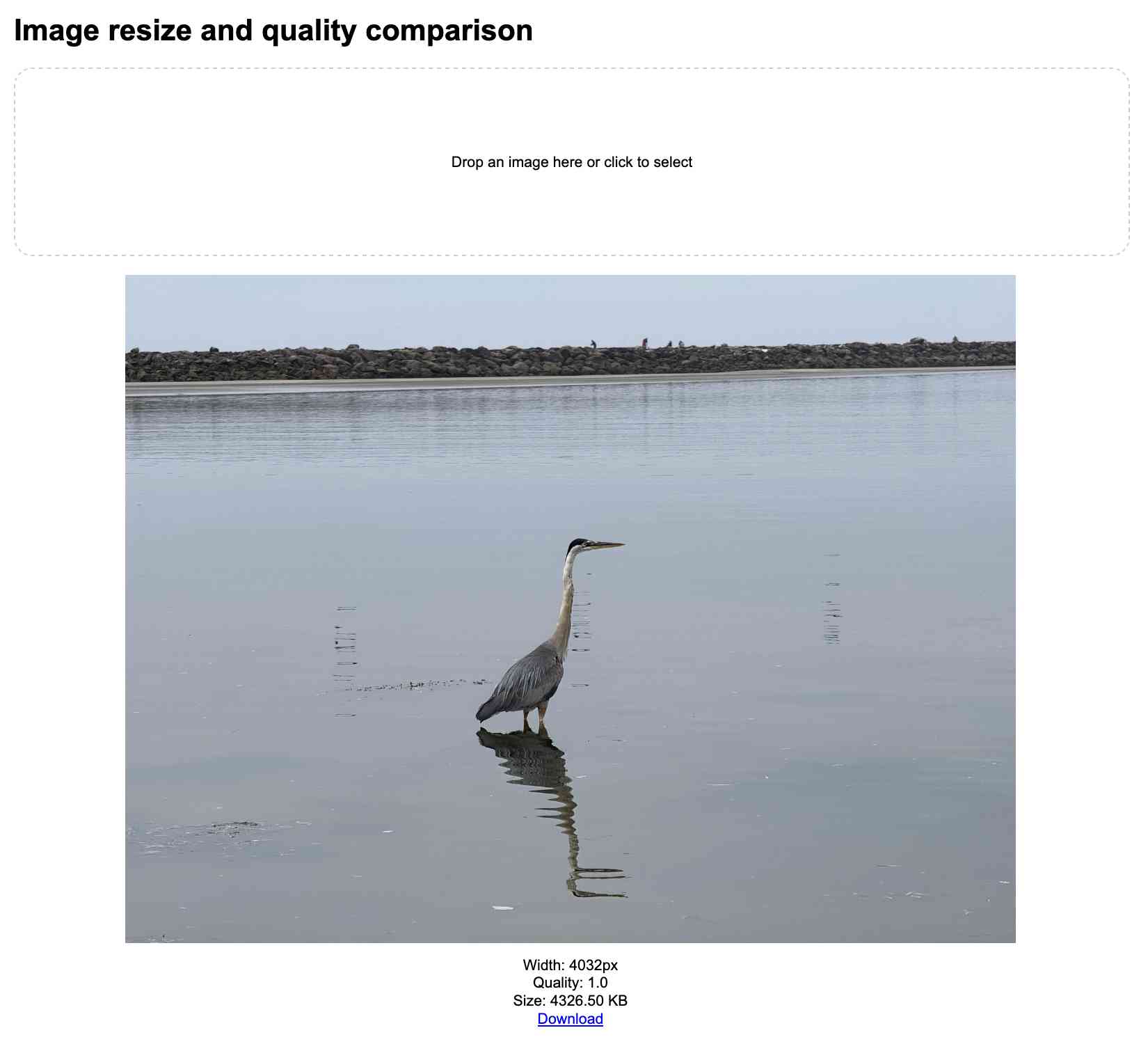

Another tiny tool I built with Claude 3.5 Sonnet and Artifacts. This one lets you select an image (or drag-drop one onto an area) and then displays that same image as a JPEG at quality settings of 1, 0.9, 0.7, 0.5 and 0.3, then again at half the width. Each image shows its size in KB and can be downloaded directly from the page.
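
Here's a rough sketch of how a tool like this can work. It is not the actual source: the helper names `buildVariants`, `formatKB` and `renderVariant` are made up for illustration, and dividing by 1024 with two decimal places for the KB label is an assumption. The idea is to pair each width with each quality setting, then encode each variant through a canvas using the browser's `canvas.toBlob()`:

```javascript
// Quality settings and width scales taken from the description above.
const QUALITIES = [1, 0.9, 0.7, 0.5, 0.3];
const WIDTH_SCALES = [1, 0.5]; // original width, then half width

// Hypothetical helper: pair every width scale with every quality setting.
function buildVariants(width, height) {
  const variants = [];
  for (const scale of WIDTH_SCALES) {
    for (const quality of QUALITIES) {
      variants.push({
        width: Math.round(width * scale),
        height: Math.round(height * scale),
        quality,
      });
    }
  }
  return variants;
}

// Hypothetical helper: format a byte count as KB.
// Assumption: 1 KB = 1024 bytes, shown to two decimal places.
function formatKB(bytes) {
  return (bytes / 1024).toFixed(2) + " KB";
}

// Browser-only: draw a loaded image onto a canvas at the variant's size
// and encode it as a JPEG at the variant's quality.
function renderVariant(img, variant) {
  return new Promise((resolve, reject) => {
    const canvas = document.createElement("canvas");
    canvas.width = variant.width;
    canvas.height = variant.height;
    canvas.getContext("2d").drawImage(img, 0, 0, variant.width, variant.height);
    canvas.toBlob(
      (blob) => {
        if (!blob) {
          reject(new Error("JPEG encoding failed"));
          return;
        }
        resolve({
          ...variant,
          sizeLabel: formatKB(blob.size),
          // Object URL usable as the href of a download link.
          url: URL.createObjectURL(blob),
        });
      },
      "image/jpeg",
      variant.quality
    );
  });
}
```

For an 800x600 image, `buildVariants(800, 600)` gives ten entries: five at 800 pixels wide and five at 400. Each one can be passed to `renderVariant` along with the loaded `<img>` element to get a size label and a download URL.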

I'm trying to use more images on my blog (example 1, example 2) and I like to reduce their file size and quality while keeping them legible.

The prompt sequence I used for this was:

Build an artifact (no React) that I can drop an image onto and it presents that image resized to different JPEG quality levels, each with a download link

Claude produced this initial artifact. I followed up with:

change it so that for any image it provides it in the following:

- original width, full quality

- original width, 0.9 quality

- original width, 0.7 quality

- original width, 0.5 quality

- original width, 0.3 quality

- half width - same array of qualities

For each image clicking it should toggle its display to full width and then back to max-width of 80%

Images should show their size in KB

Claude produced this v2.

I tweaked it a tiny bit (modifying how full-width images are displayed) - the final source code is available here. I'm hosting it on my own site which means the Download links work correctly - when hosted on claude.site Claude's CSP headers prevent those from functioning.

Tags: ai-assisted-programming, claude, tools, projects, generative-ai, ai, llms

Did you know about Instruments?

26 July 2024 | 1:06 pm

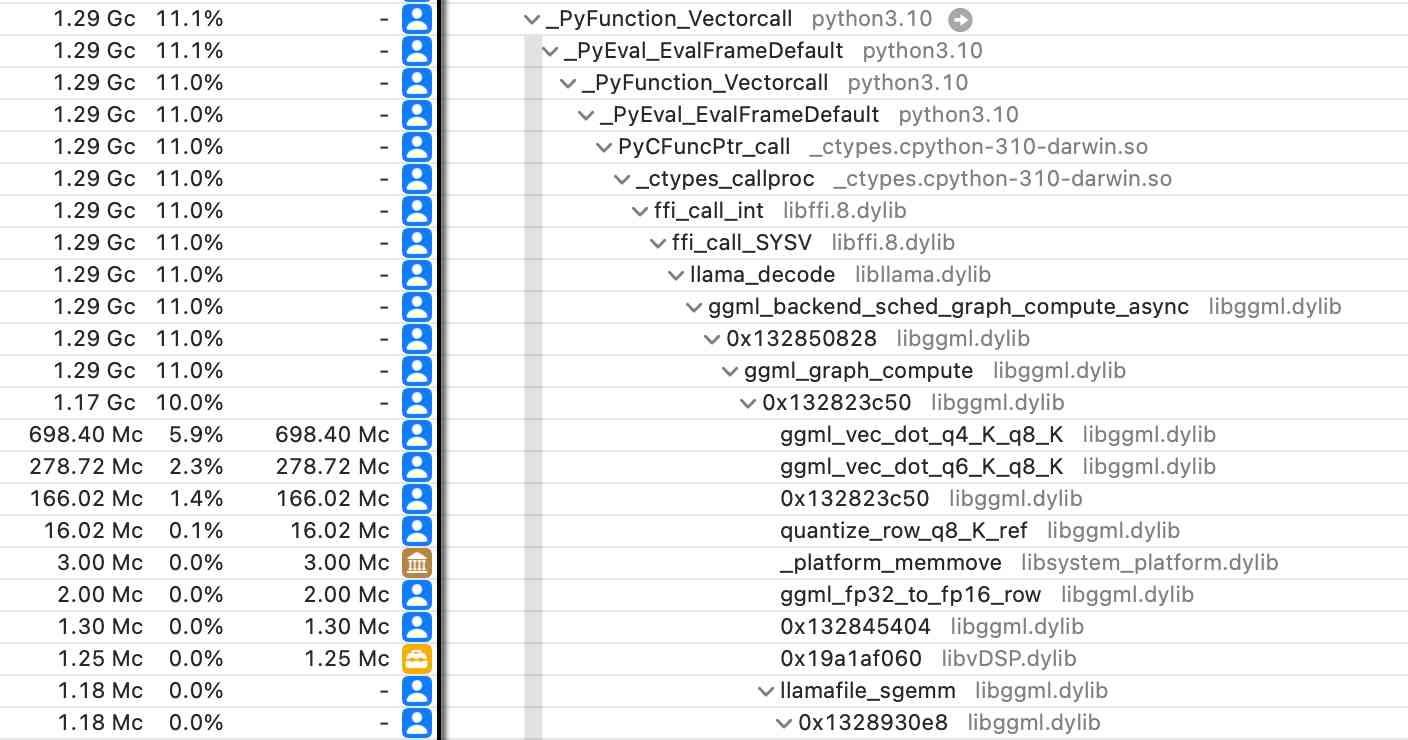

Thorsten Ball shows how the macOS Instruments app (installed as part of Xcode) can be used to run a CPU profiler against any application - not just code written in Swift/Objective-C. I tried this against a Python process running LLM, executing a Llama 3.1 prompt with my new llm-gguf plugin, and captured this:

Via lobste.rs

Tags: observability, profiling, python

Quoting Amir Efrati and Aaron Holmes

25 July 2024 | 9:35 pm

Our estimate of OpenAI’s $4 billion in inference costs comes from a person with knowledge of the cluster of servers OpenAI rents from Microsoft. That cluster has the equivalent of 350,000 Nvidia A100 chips, this person said. About 290,000 of those chips, or more than 80% of the cluster, were powering ChatGPT, this person said.

— Amir Efrati and Aaron Holmes

Tags: generative-ai, openai, chatgpt, ai, llms